In modern enterprise operations, accelerating resolution times while focusing scarce human attention where it matters most is not optional. Predictive scoring loops power agent prioritization systems that nudge work to the right person at the right time. This implementation guide is written for decision makers who need a practical pathway from event streams to production scoring engines that integrate with orchestration routing to reduce mean time to resolution. You will find a clear breakdown of feature engineering techniques for event-driven signals, model retraining cadence and governance, confidence thresholds that drive routing logic, and operational patterns to measure impact on MTTR. Throughout, examples align to enterprise contexts where legacy systems, compliance, and scale are central concerns. If you lead digital transformation, this post will equip you to evaluate trade offs, define success metrics, and partner with providers to deploy predictive scoring loops that deliver rapid, measurable ROI.

Why predictive scoring loops matter for enterprise operations

Predictive scoring loops transform raw signals from systems and customers into a prioritized list of cases, incidents, or tasks. When your teams face high volumes of incoming events from monitoring, CRM, support platforms, or IoT devices, manual triage becomes a bottleneck. A scoring engine that runs continuously over event streams assigns a probability or score to each case. Those scores feed orchestration routers that decide which agent or automated playbook gets the case first. The result is fewer escalations, faster response, and lower mean time to resolution. Learn more in our post on Designing Playbooks: Template Library for Common Enterprise Use Cases.

For a Chief Digital Officer, the promise is measurable. Prioritization driven by predictive scoring loops can shift resource allocation dynamically, reduce idle time caused by noisy alerts, and increase first contact resolution. It also improves customer satisfaction by ensuring high-risk or high-value matters get immediate attention. Since these loops are data driven, they can be tuned to match business goals such as compliance windows, SLA adherence, or revenue impact.

Predictive scoring loops are particularly valuable when systems are distributed and event velocity is high. Instead of batch only analytics that lag reality, a loop that ingests streaming data and continuously refreshes scores creates an adaptive workflow. The loop closes the gap between detection and resolution by connecting scoring outputs to routing rules and automated remediations. For enterprises focused on operational efficiency, that is where digital transformation produces tangible returns.

Core components of a predictive scoring loop

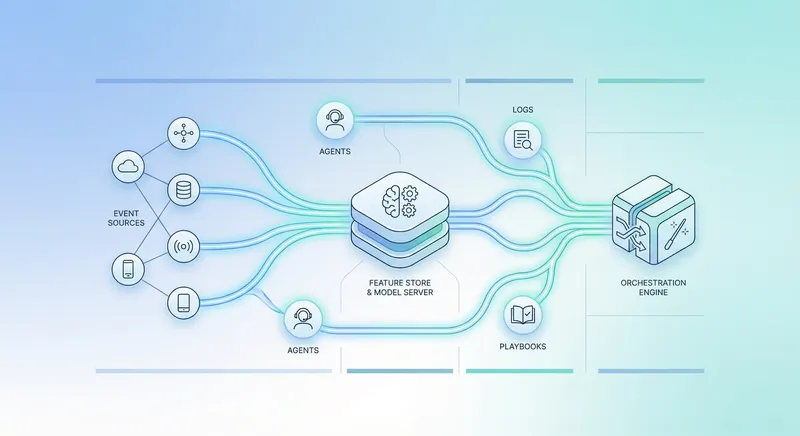

A robust predictive scoring loop includes five core components: event ingestion, feature store and engineering, scoring model, decision logic for routing, and continuous feedback. Event ingestion captures raw signals from logs, application events, customer interactions, telemetry, and external feeds. The feature store aggregates and materializes features for low latency scoring. The scoring model uses engineered features to compute probabilities or risk scores. Decision logic maps scores to actions via routing and orchestration. Finally, feedback channels capture outcomes and human corrections to close the loop and retrain models. Learn more in our post on Continuous Optimization: Implement Closed‑Loop Feedback for Adaptive Workflows.

Each component must be designed for the enterprise constraints of security, compliance, and scale. Event ingestion should support schema evolution and provenance. Feature engineering needs reproducibility so features computed in training match those computed in production. The scoring model requires explainability for operator trust. Decision logic must support deterministic fallbacks so that if a model fails or confidence is low, safe routing still happens. Feedback channels must collect labeled outcomes with timestamps to measure MTTR improvements and model health.

Architecturally, these pieces are often split between streaming platforms, feature stores, model serving layers, and orchestration engines. Integration points are critical. The scoring model must expose an API or event sink that the orchestration layer can query or subscribe to. Alternatively, a scoring microservice can push enriched events into an event bus that the router listens to. The design choice depends on latency needs. For MTTR reduction, aim for end-to-end latencies under the human attention window you are optimizing against.

Feature engineering from event streams: practical patterns

Feature engineering is the foundation of any high-performing predictive scoring loop. Because most enterprise events arrive as streams, producing stable, timely features requires careful design. Start by cataloging event sources and mapping each to likely predictive signals. Common event stream sources include system alerts, support ticket attributes, user behavior logs, transaction traces, and third party monitoring feeds. For each source, identify both instantaneous features and temporal aggregates.

Instantaneous features are values present on the incoming event. Examples are severity level, customer tier, error code, or device type. Temporal aggregates summarize recent activity and are often more predictive. Examples include counts of similar errors in the last 30 minutes, rolling means of response time, time since last resolved incident for the same user, or intensity of failed logins over the past hour. Use fixed window aggregates and exponentially weighted moving averages where appropriate to capture trend and volatility.

Normalization and encoding matter. Categorical values should be encoded consistently across producers. Avoid one-off encodings in production that do not match training. For numerical signals, apply transformations that stabilize variance. Where possible build derived signals that encode business context, such as an urgency score computed from SLA remaining time and impact level. A centralized feature registry helps maintain definitions, ownership, and lineage for each feature used in predictive scoring loops.

Ensure features can be materialized with low latency. Use stream processing frameworks to compute aggregates near real time and persist them into a feature store or cache. For features that are expensive to compute, consider approximations that preserve ranking quality. Finally, instrument metrics for feature freshness and coverage. If feature availability drops, the loop should degrade gracefully and fall back to robust features that are always present.

Common feature families and examples

- Signal features such as error codes, event types, and sensor readings.

- Temporal features like rolling counts, moving averages, and time since critical events.

- Contextual features including customer tier, contract SLA, and device geography.

- Derived business features such as estimated revenue at risk, compliance risk score, and escalation cost.

- Behavioral features from user actions or system automation frequency.

When building features for predictive scoring loops, account for missingness. Missing data often carries signal by itself. Encode missingness explicitly rather than imputing blindly. Document assumptions so that model retraining and feature refresh cycles keep the feature semantics consistent.

Model design and retraining cadence for live systems

Model design for production scoring balances accuracy, latency, and interpretability. Choose models that meet your latency and explainability requirements. For many routing decisions, gradient boosted trees or compact neural models provide strong accuracy with explainability tools. For very low latency or highly constrained environments, linear models with feature hashing can perform well. Model choice influences how you handle feature interactions and how you instrument confidence. Learn more in our post on Trust & Explainability: Building Explainable Agentic Systems That Executives Accept.

Retraining cadence is driven by data drift, concept drift, label availability, and business rhythm. For event-driven prioritization tasks, set an initial retraining cadence based on expected change velocity. A sensible starting point is weekly retraining for active systems and monthly for more stable domains. However, automate drift detection so that unexpected changes trigger retraining outside the regular cadence. Drift detection can monitor distribution shifts in key features, label drift, and degradation in ranking metrics.

Combine scheduled retraining with continuous model evaluation. Use shadow deployments to test new models against production traffic without affecting routing. In a shadow run, new model scores are computed in parallel and compared with the production model using offline and online metrics. Evaluate both offline ranking metrics and online operational metrics such as average time to acknowledgement and impact on escalation rates. Ensure rollback paths are automated and that model promotion requires passing predefined guardrails.

Keep training pipelines reproducible. Every retrained model should have an immutable artifact with training data hashes, hyperparameters, and feature versions. This traceability is essential for audits and for diagnosing issues when the scoring loop behaves differently after a model update.

Labeling strategies and cold start solutions

Labels for prioritization often come from human outcomes such as whether a case was escalated, whether an incident caused breach of SLA, or time to resolution. Create robust pipelines to capture these outcomes and align labels to the event timestamp. When early models need training and labels are sparse, use proxy signals such as escalation flags, complaint volume, or expert heuristics. Active learning can accelerate label collection by surfacing uncertain cases to human reviewers and feeding corrections back into training.

For new event types or lines of business, cold start is a reality. Use transfer learning from similar products or simple rule-based routing as a temporary baseline. As labeled outcomes accumulate, the model will gain predictive power. Update stakeholders on expected performance ramp and cadence so expectations are aligned.

Confidence thresholds, routing logic, and orchestration integration

Scores alone do not make decisions. Confidence thresholds map scores to deterministic routing actions. For predictive scoring loops, define multiple bands such as high confidence, medium confidence, and low confidence. Each band triggers a specific orchestrated action. For example, a high confidence high severity case could route directly to a senior on-call with an automated pre-brief. A medium confidence case might go to a triage queue with suggested playbooks. Low confidence cases may be batched or assigned to automated remediation flows.

Design thresholds with both precision and recall trade offs in mind. Tight thresholds reduce false positives but may miss urgent issues. Broader thresholds capture more true cases at the cost of human attention. Use simulation and cost matrices that quantify the business cost of missing a high severity event versus the cost of an unnecessary escalation. This allows you to set thresholds that optimize expected business impact rather than raw model metrics.

Integrate the scoring output with your orchestration engine using simple contracts. The scoring layer should emit a structured record that contains the score, confidence metric, top contributing features for explainability, and routing metadata. The orchestration engine consumes the record to apply routing rules and execute composable playbooks. Ensure the orchestrator supports conditional logic, parallelism, and rollback actions. Where possible, include circuit breakers so that if scoring is unavailable or confidence drops, safe fallback routing occurs.

Instrument the entire flow. Track how many cases are routed by each score band, time from routing to acceptance, automation success rates, and changes in MTTR correlated to score-based routing. These metrics feed back into model retraining and operational tuning of thresholds in predictive scoring loops.

Example routing bands and actions

- Score 0.9 and above: Direct route to senior responder, attach incident history, trigger virtual war room.

- Score 0.7 to 0.9: Route to triage team with suggested playbook and required SLA target.

- Score 0.4 to 0.7: Queue for automation attempts and human review if automation fails.

- Score below 0.4: Batch for periodic processing, low priority backlog handling.

Implementation roadmap and architecture patterns

Implementing predictive scoring loops is best done iteratively. Start with a discovery sprint to map event sources, label availability, and orchestration capabilities. Next create a minimum viable scoring loop that proves value quickly. An MVP can use a simple model and small set of features to route a subset of cases. Measure impact on MTTR and agent workload before scaling feature scope and model complexity.

A typical enterprise architecture includes a streaming layer that ingests events, a stream processor that computes real time features, a feature store for materialized features, a model server for low latency scoring, and an orchestration engine for routing. Data and control planes should be separated so teams can iterate without disrupting production. Provide a lightweight API between model server and orchestrator and define a message contract that includes score, confidence, feature snapshot, and trace identifiers for observability.

For organizations with strict security and compliance, host the scoring loop within a private cloud or VPC and ensure encryption in transit and at rest. Use role based access control for model promotion and configuration changes. If you use third party services, ensure contractual controls for data residency and audit logs. Maintain a model registry and change log so that audits can trace which model version handled a particular case.

Scale the system by partitioning scoring by tenant or region, horizontal autoscaling for model servers, and sharding the feature store. Monitor cost and latency trade offs. Use sample-based scoring where appropriate to reduce processing when event volumes spike, while ensuring critical classes of events always receive scores.

Operational metrics, evaluation, and business KPIs

Measuring the performance of predictive scoring loops requires both model centric and business centric metrics. Model centric metrics include AUC, precision at k, calibration, and ranking quality. Business centric metrics reflect operational outcomes such as mean time to acknowledgement, mean time to resolution, escalation rate, SLA compliance, customer satisfaction, and cost of handling. Tie model improvements to one or more business KPIs to justify investment and prioritize feature work.

Use counterfactual experiments and canary rollouts to measure causality. For example, route half of the eligible traffic using the scoring loop and the other half with the legacy routing rules. Compare MTTR, escalation rates, and customer impact across cohorts. Maintain statistical rigor by controlling for seasonality and workload changes. For fast moving environments, short rolling experiments help iterate quickly without exposing the whole organization to risk.

Track confidence calibration. When scores are well calibrated, a score of 0.8 should correspond to roughly 80 percent likelihood of the labeled outcome. Calibration affects how thresholds map to expected outcomes. Uncalibrated scores can lead to poor routing decisions and erosion of trust. Use calibration techniques in the training pipeline and monitor calibration drift in production.

Finally, quantify ROI with a clear cost model. Include the cost of human interventions avoided, SLA penalties averted, and customer churn reduction attributed to faster resolution. Present conservative and optimistic scenarios to stakeholders. Spin up live ROI dashboards that combine model health and business KPIs to give leadership a single view into the value produced by predictive scoring loops.

Governance, security, and operational resilience

Governance is critical in enterprise deployments. Establish model governance that defines owners, approval workflows for model promotion, and audit trails for configuration changes. For predictive scoring loops that influence routing and remediation, include human-in-the-loop controls during initial rollout and require sign off from stakeholders for high impact rules. Maintain a model risk register that outlines potential failure modes and mitigation plans.

Security practices should include encryption, secure key management, and least privilege access. Ensure that event data used for scoring is sanitized when it includes personal data. Where required by regulation, implement data retention policies and ensure deletions are propagated to training datasets and feature stores. Maintain lineage logs so that any request for data provenance can be honored quickly.

Operational resilience means planning for model unavailability and degraded feature completeness. Implement fallbacks to rule-based routing or simpler models when feature freshness falls below thresholds. Use health checks and circuit breakers between components so that the orchestrator can detect when to switch to fallback logic. Automate incident response playbooks that are triggered when model predictions diverge sharply from observed outcomes in a short window.

Document escalations and maintain runbooks that include steps to quarantine a model version, switch traffic, and restore prior behavior. Regularly test these playbooks in chaos engineering exercises so that teams are prepared when production surprises occur. These practices reduce both downtime and reputational risk when predictive scoring loops are operating at scale.

Scaling and continuous improvement for long term value

As adoption grows, both the model and the surrounding processes must scale. Introduce feature prioritization cycles to determine which new signals to onboard. Use cost benefit analysis to weigh compute cost of a new feature against expected improvement in decision quality. Implement automated feature tests that validate new features for drift, leakage, and performance before they are enabled in production scoring loops.

Promote a culture of continuous improvement. Run regular retrospectives that connect operational learnings to model updates. Encourage cross functional collaboration between data engineering, ML engineering, operations, and business owners to keep the scoring loop aligned with evolving priorities. Use observability to identify when a model no longer meets operational needs and accelerate retraining or feature updates accordingly.

For complex organizations, consider a federated model governance where central teams provide common infrastructure and guidelines while domain teams own feature sets and local model tuning. This balances speed of innovation with consistency and compliance across business units. Provide shared building blocks such as a feature registry, model serving templates, and orchestration playbook libraries to reduce duplication and time to value for new predictive scoring loops.

Conclusion

Predictive scoring loops are a powerful lever for enterprises seeking to reduce mean time to resolution and make human attention more effective. By converting event streams into prioritized, explainable scores and coupling those scores with orchestration rules, organizations can ensure that high impact cases receive the fastest and most skilled response. The path to production requires careful work across feature engineering, model lifecycle management, routing logic, and governance. Feature engineering must focus on stable, low latency signals that capture both instantaneous state and temporal context. Retraining cadence should balance scheduled updates with automated drift detection to maintain model relevance. Confidence thresholds translate score intent into deterministic actions, and integration with orchestration engines ensures those actions are executed safely and auditable. Operational metrics that tie model performance to MTTR and SLA outcomes are essential to demonstrate ROI and keep investment aligned with business priorities.

Practical deployments start small with an MVP that routes a subset of cases, proves impact, and then scales. Use shadow testing and canary rollouts to validate changes without risking broad disruption. Build observability into each stage of the loop so that feature freshness, model calibration, routing outcomes, and human overrides are visible in live dashboards. Governance must balance rapid iteration with controls around access, data handling, and model promotion so that enterprise risk is managed while innovation continues.

For Chief Digital Officers and technology leaders, the most successful predictive scoring loops are those that align technology with operational needs. Prioritize early wins that reduce MTTR in high cost or high risk areas, then reinvest gains into broader automation and agent orchestration. If you need support designing or deploying predictive scoring loops, A.I. PRIME combines AI consulting, workflow design, and orchestration deployment to accelerate outcomes. Our teams help map features from event streams, implement model lifecycles, design confidence thresholds and routing policies, and stand up governed production pipelines that deliver measurable MTTR reductions. Reach out to explore a tailored roadmap that aligns with your SLA targets and compliance requirements. With the right combination of engineering rigor and operational alignment, predictive scoring loops can become a durable competitive advantage that drives efficiency, compliance, and customer satisfaction.